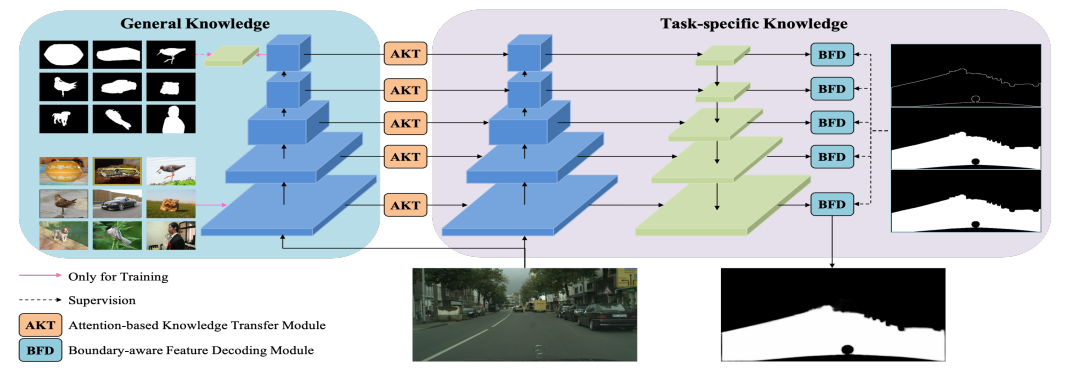

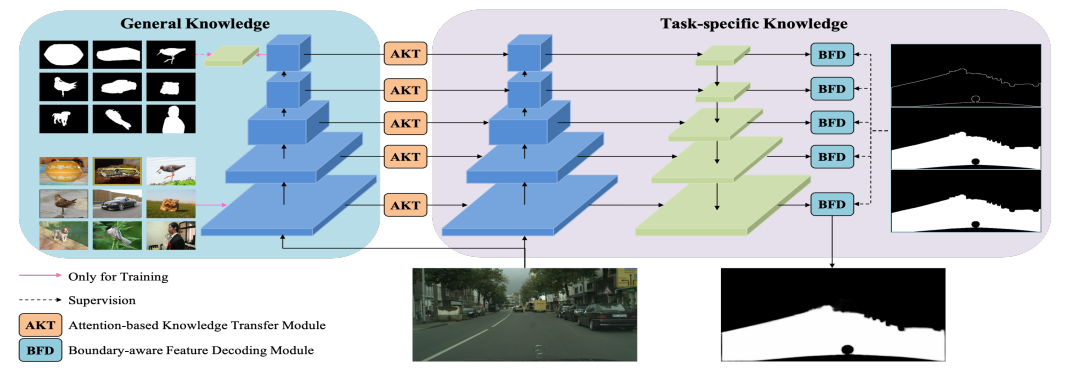

The framework of the baseline

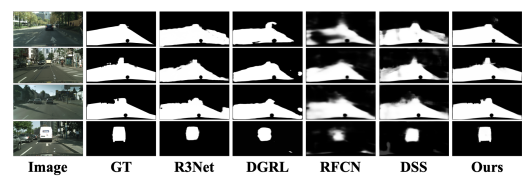

Recently, general salient object detection (SOD) has made great progress with the rapid development of deep neural networks. However, task-aware SOD has hardly been studied, owing to the lack of task-specific datasets. In this paper, we construct a driving task-oriented dataset in which salient objects are annotated with pixel-level masks. Compared with general SOD datasets, we find that the cross-domain knowledge difference and the task-specific scene gap are the two main challenges in focusing on salient objects while driving. Inspired by these findings, we propose a baseline model for driving task-aware SOD based on a knowledge transfer convolutional neural network. In this network, we construct an attention-based knowledge transfer module to compensate for the knowledge difference. In addition, an efficient boundary-aware feature decoding module is introduced to perform fine-grained feature decoding for objects in complex task-specific scenes. The whole network integrates the knowledge transfer and feature decoding modules in a progressive manner. Experiments show that the proposed dataset is very challenging and that the proposed method outperforms 12 state-of-the-art methods on it. Together, the dataset and the baseline facilitate the development of task-aware SOD.
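To make the idea of attention-based knowledge transfer more concrete, the following is a minimal NumPy sketch of one possible form: channel attention computed from task-specific features gates how much of the general-domain features is added in. This is an illustrative assumption, not the module described in the paper; the function name `attention_transfer`, the parameters `weight` and `bias`, and the gating design are all hypothetical.

```python
import numpy as np


def sigmoid(x):
    """Element-wise logistic function."""
    return 1.0 / (1.0 + np.exp(-x))


def attention_transfer(task_feat, general_feat, weight, bias):
    """Hypothetical channel-attention fusion (not the paper's exact module).

    task_feat, general_feat: feature maps of shape (C, H, W)
    weight: (C, C) matrix and bias: (C,) vector of a single linear layer
    """
    if task_feat.shape != general_feat.shape:
        raise ValueError("feature maps must share the same shape")
    # Global average pooling summarises each task-feature channel: (C,)
    pooled = task_feat.mean(axis=(1, 2))
    # One linear layer plus sigmoid yields a gate in (0, 1) per channel
    gate = sigmoid(weight @ pooled + bias)
    # Add the gated general-domain features to the task features
    return task_feat + gate[:, None, None] * general_feat
```

With zero `weight` and `bias`, every gate equals 0.5, so half of each general-domain channel is added to the task features. In a trained network these parameters would be learned instead.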